In our previous Daala demo page, we described TF, a mechanism that allows us to split and merge blocks in the frequency domain without recomputing any block transforms. Among other uses, TF gives us a novel means of sidestepping design complications stemming from use of multiple block sizes along with frequency-domain intra prediction.

Now that we have TF under our belt, we can look at another technique that relies on TF: Prediction of chroma planes from luma.

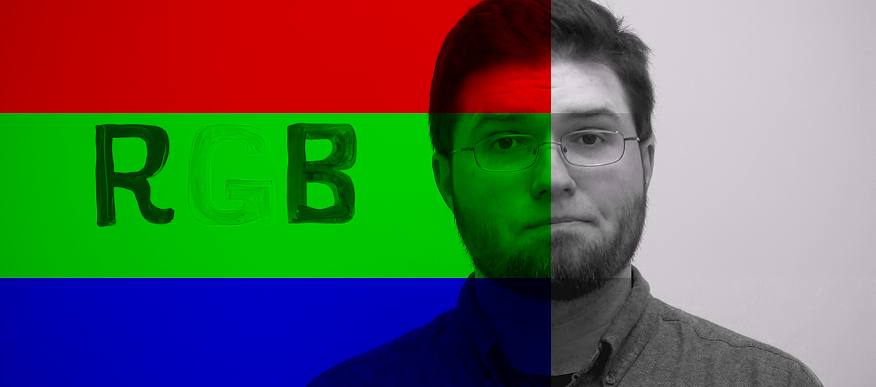

Although video displays use red, green, and blue primaries, video itself isn't typically coded as RGB because the red, green, and blue channels are highly correlated. That is, the red plane looks like a red version of the original picture, the green plane looks like a green version of the original picture, and the blue plane looks like a blue version of the original picture. We'd be encoding nearly the same picture three times in each frame.

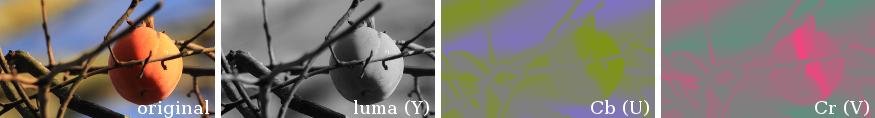

Most video representations reduce channel correlation by using a YUV-like color space. Y is the grayscale luma channel, a weighted sum of red, green, and blue. The chroma channels U and V subtract the luma signal from blue and the luma signal from red respectively. YUV substantially reduces coding redundancy across channels.

And yet, it's obvious looking at YUV decompositions of frames that edges in the luma plane still correlate with edges in the chroma planes. There's more cross-channel redundancy to eliminate.

Modern video codecs (VPX, h.264, HEVC) predict the chroma channels much the same way they predict the luma channel, using directional spatial prediction from neighboring block data. Luma predicts from luma, and chroma predicts from chroma. There's little or no attempt to account for remaining cross-channel correlation.

Academic papers have long explored ways to further reduce or exploit the channel correlation of YUV colorspaces. In 2009, the paper Intra Prediction Method Based on the Linear Relationship between the Channels for YUV 4:2:0 Intra Coding proposed prediction of chroma pixels from luma pixels using a simple linear model fitted from neighboring pixel data. The following year, LG Electronics recommended adoption of this technique within the proposed HEVC standard, where it became known as 'Chroma from Luma'.

The idea is deceptively and awesomely simple. Within small areas of an image, the chroma values of a pixel strongly correlate with the absolute luma value of that pixel. A simple y=α+Βx linear relationship makes an excellent predictor:

| PixelU[x,y] | = | αU + | ΒUPixelY[x,y] |

| PixelV[x,y] | = | αV + | ΒVPixelY[x,y] |

All we need to do is fit values of α and Β from neighboring pixels for each block that's to be predicted. The only complication is a straightforward digression into chroma subsampling (see the proposal for details; it rolls linear subsampling of luma into the prediction equation).

This spatial implementation of Chroma from Luma did not make it into the final HEVC standard. The additional computational expense (as much as 30% in the encoder) plus loss of luma/chroma plane parallelism concerned hardware implementors. More importantly, the HEVC evaluation process relied almost exclusively on PSNR measurements, and although Chroma from Luma produced a considerable subjective visual improvement, this obvious visual improvement was not reflected in a correspondingly large improvement in PSNR.

There's obvious perceptual value to Chroma from Luma prediction, but the technique proposed for HEVC wouldn't work in Daala. Even if the computational cost didn't concern us, it requires reconstructed pixels in the spatial domain, and we'd run into all the same lapping-related logistical problems that prevent us from performing intra prediction in space rather than frequency.

Thus, we need to perform Chroma from Luma in the frequency domain, just like intra prediction. As it turns out, a frequency-domain Chroma from Luma is considerably easier and faster than the spatial counterpart.

Once again, let's ignore chroma subsampling for the moment. Assume that our luma and chroma planes are the same size, and blocks in all three planes strictly co-located. Further assume that our transform has uniform scaling behavior (and our lapped transform does), and the linear prediction model across planes holds in the frequency domain. Coefficients of chroma are all scaled by the same factor Β compared to the luma coefficients, just as the chroma pixel values were. Even better, the offset α only appears in the DC coefficient, so our linear model becomes:

| DCU | = | αU + | ΒUDCY |

| ACU[x,y] | = | ΒUACY[x,y] | |

| DCV | = | αV + | ΒVDCY |

| ACV[x,y] | = | ΒVACY[x,y] |

Of course, chroma planes are usually subsampled in actual video. This is where TF comes in.

Using 4:2:0 video as an example, U and V plane resolution is halved both vertically and horizontally compared to luma. Spatial positioning of the subsampled chroma pixels varies depending on standard, but the differences are irrelevant when we work in the frequency domain, another advantage over spatial CfL prediction.

The coding process transforms blocks of luma and chroma pixels into frequency domain blocks of the same size. Our example above illustrates the arrangement of the luma plane and chroma planes using 4x4 blocks. Each chroma block covers the same area as four luma blocks.

To predict the frequency coefficients of a single chroma block, we need a single luma block covering that same area, so we TF the four co-located luma blocks into one. The resulting luma block covers the same spatial area as the chroma block, but with twice the horizontal and vertical resolution. Subsampling is much easier in the frequency domain; we simply drop the highest frequency coefficients that have no counterparts in the chroma block (or more accurately, we don't bother computing them in the first place). Now we have a luma block that matches our chroma block, and carry out prediction as above.

As with spatial domain Chroma from Luma, we need to fit the α and Β parameters. This is where our frequency domain version wins big; we don't need to consider all the AC coefficients in our least squares fit, we only need to consider a few, e.g. the three immediately surrounding DC.

As with all prediction, a powerful underlying technique is only a start. Tuning and refinement of the details can account for a substantial amount of final performance. At the moment, we use the linear model described above with no such refinements. That's work yet to be done.

| Chroma From Luma Prediction |

|

Above: An interactive illustration of Chroma from Luma prediction output on the test image 'Fruit'. The dropdown menu allows selection of the original image, Chroma from Luma prediction prediction using multiple blocksizes as selected by the encoder, prediction with only 4x4 or 16x16 block sizes, and DC-only prediction for comparison.

Remember when comparing images above that this is the output of the Chroma from Luma predictor only, not the encoder. The prediction doesn't have to look good, it only has to reduce the number of bits to encode.

Even in this early state (and despite the obvious places where it goes off the rails), our frequency-domain Chroma from Luma predictor in Daala lends solid subjective improvements when running in the full encoder.

Despite lending visual improvements, Chroma from Luma is a PSNR penalty in Daala. This is not particularly surprising, as neither PSNR nor any of the other common objective quality measures used in video coding represent color perception well, but it is especially interesting as the similar lack of PSNR performance in HEVC testing would certainly have contributed to it being dropped from the final HEVC standard. Perhaps the joint working group might have overlooked that if the spatial version of Chroma from Luma had not also been an apparent performance penalty. I speculate here mainly because our frequency domain implementation is substantially faster than classic intra prediction, not slower.

Aside from research inertia, and the black eye of spatial Chroma from Luma failing to make the HEVC cut, other barriers likely hindered discovery of the frequency-domain version of Chroma from Luma until now. The biggest: other researchers didn't have TF, and so there was no practical way to reconcile block sizes of 4:2:0 video in the frequency domain before prediction. In addition, the h.264 and HEVC transforms don't have uniform output scaling, so the linear model doesn't quite hold after transformation.

Some serious work on frequency-domain Chroma from Luma remains, specifically the fiddly task of tuning its practical performance, especially in those cases where it does not (and cannot) work well. I'll have an update eventually, but for the next demo page we'll move on to another technique pioneered in Opus: Pyramid Vector Quantization.

—Monty (monty@xiph.org) October 17, 2013