It's worth mentioning surround encoding improvements in 1.1, partly because quite a few people are unaware that Opus even supports surround audio. It does— up to 255 channels, same as Vorbis. Also similar to the early Vorbis releases, surround encoding in Opus 1.0 was mostly untuned. The quality was good, but it was not particularly efficient.

The 1.1 release includes several simple but significant surround encoding upgrades, mostly involving smarter use of bit allocation.

Unlike Vorbis, Opus only supports coupling channels into stereo pairs. Surround streams consist of a collection of stereo and mono substreams bundled together.

Previously, bit allocation in a surround stream was about as simple as possible; every channel got the same number of bits to start, whether it was mono, part of a stereo pair, or LFE. In addition, these assignments were inflexible; channel bit allocation wasn't adjusted according to the content it was carrying. Even an inaudible channel carrying near-silence got the same allocation (though later decision making could effectively turn off channels carrying true digital silence).

Libopus 1.1 uses a better scheme that's still relatively simple. For requested bitrates over 240kbps, every substream (whether it's stereo or mono) gets 20kbit up front (except LFE, which gets 3.5kbps). Below 240kbps, the 20kbit allocation is scaled proportionately. Remaining bits are then evenly divided amongst channels. This means that at at the lowest bitrates, stereo and mono substreams begin with the same base rate. As the overall bitrate increases, stereo substreams receive twice the additional bits of mono streams so that at high rates, stereo substreams asymptotically approach having twice as many bits as mono.

In addition, these allocations are fungible in 1.1. Further content analysis, such as the stereo saving, surround masking, and tonality estimation below, can adjust bit allocation between streams.

Opus 1.0 used SILK mode to encode the LFE channel at low rates, and transitioned to CELT mode at higher rates. SILK mode is not capable of as high quality for the LFE as CELT is, however 1.0's CELT mode was unable to encode only the low frequencies efficiently, so SILK mode was used at low rates to save space. Improvements in the encoder infrastructure allow us to use CELT mode with a limited number of bands to improve the encoded quality at low rates, and greatly reduce the LFE channel usage at higher rates.

Surround masking takes advantage of cross-channel masking between free-field loudspeakers. Obviously, we can't do that for stereo, as stereo is often listened to on headphones or nearfield monitors, but for surround encodings played on typical surround placements, there's considerable savings to be had by assuming freefield masking.

Note that these are the newest changes in the 1.1 beta and are likely to see considerable additional tweaking between now and the first release candidate. The 1.1 beta (this release) currently adjusts allocation according to a simple average of log band energy across channels. This is a rather naïve measure that does not adjust based on per-band content, and could produce poor results if a channel's audible content is predominantly in only one or a few bands. As such, we're already playing with additional tunings. Do stop by and help with listening tests!

|

Bitrate adjustment based on surround masking

|

|

|

total bitrate

|

|

| download these samples: | [ | Tron | | | ...Opus 1.0 | | | ...Opus 1.1 beta | ] |

| [ | Sita | | | ...Opus 1.0 | | | ...Opus 1.1 beta | ] |

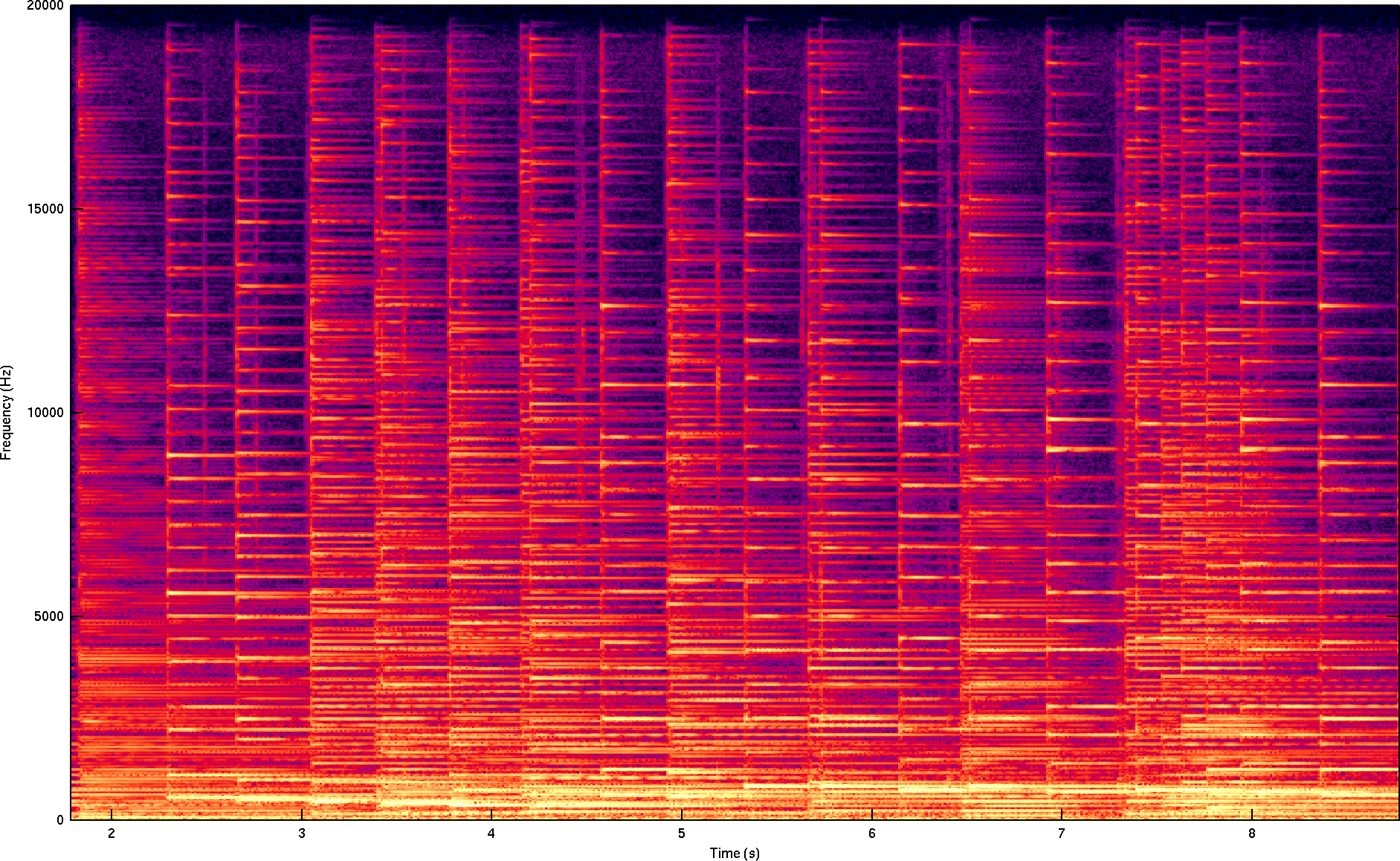

Opus is a low-delay codec, and uses a shorter MDCT window than non-realtime audio codecs such as Vorbis or AAC. A short MDCT window has higher spectral energy leakage between bins, and this leakage decreases coding efficiency for strong tones. Samples that contain strong tones with a large number of harmonics that stand well above the noise floor are thus especially difficult.

In addition, typical bit allocation schemes (such as used by Opus) reduce the precision of high-frequency bands, a strategy appropriate for the vast majority of audio. If the precision drops too low however, strong harmonics in the upper frequency bands wash out into an indistinct, fuzzy hiss.

The harpsichord is a good example of both concerns. It is both strongly tonal, and produces exceptionally strong harmonics into the upper limits of the audible spectrum. A harpsichord was, in fact, the sample on which Opus 1.0 performed worst in the 2011 64 kb/s HydrogenAudio listening test.

The first step toward improving tonal samples is identifying them, so libopus 1.1 adds a tonal estimator to calculate the realtime tonality of the audio. The idea is simple; we compute a short time FFT, calculate the phase of each frequency bin, and compare this to previous FFTs. The phase of a continuous tone varies linearly (it is not constant unless the tone is perfectly aligned with a bin, which is unlikely), so our continuity estimate is:

![<i>C</i>(<i>t,f</i>) = [<i>φ</i>(<i>t</i>-1,<i>f</i>) -

2<i>φ</i>(<i>t</i>,<i>f</i>) +

<i>φ</i>(<i>t</i>+1,<i>f</i>)] mod 2*π](tonality_phase1.png)

The closer C(t,f) is to zero, the more likely we are to have a tone at that frequency. When we find tones in many regions of the spectrum, we consider the frame to be tonal and increase its bitrate.

|

Bitrate adjustment based on tonality estimation

|

||||||||||||||

|

total bitrate

|

|||||||||||||

| download these samples: | [ | harpsichord | | | ...without tonal estimation | | | ...with tonal estimation | ] |

| [ | steam engine | | | ...without tonal estimation | | | ...with tonal estimation | ] | |

| [ | 80s Rock | | | ...without tonal estimation | | | ...with tonal estimation | ] |

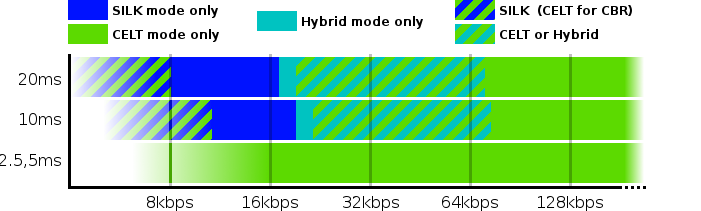

Opus is built from pieces of what began as two separate codecs: the SILK voice codec and the CELT music codec. These were combined into into Opus, a single codec that can draw on the tricks of each. Internally, Opus can operate in SILK-mode, CELT-mode, or combine the two into hybrid operation.

In previous releases, the developer or user needed to manually set the operating mode to match the audio being encoded for optimal performance. As of 1.1, libopus analyzes the audio content in realtime and dynamically selects the correct mode on the fly.

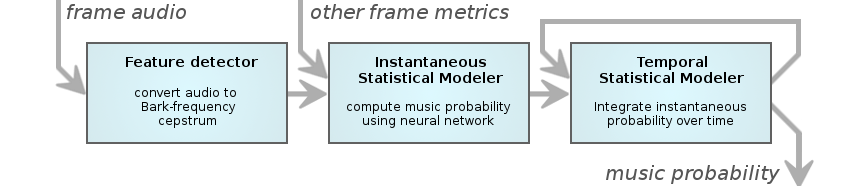

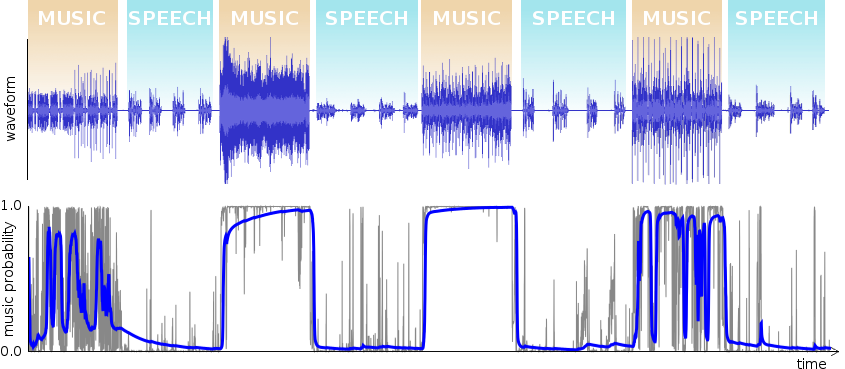

The voice/music detector in libopus 1.1 has three components; a feature detector, an instantaneous modeler, and a temporal modeler.

The first component is a feature detector that converts the input audio into Bark-frequency cepstral coefficients (BFCC) — similar to the Mel-frequency cepstral coefficients (MFCC) used in speech recognition — along with the coefficients' first- and second-order derivatives. In addition, we also use the tonality estimate we computed for VBR above and several other previously computed metrics.

The statistical modeler is a multi-layer perceptron (MLP), aka neural network. It's worth mentioning that we could have used (and did try) the more usual Gaussian mixture models, but in the end, a neural network worked better. This could be due to the fact that neural networks return a meaningful continuous probability by design, a fundamental requirement for the final probability computation below.

The neural network outputs the probability of the current frame being music given the instantaneous observation at time t. Naturally, this signal is quite noisy and we can assume that it's highly unlikely our input signal would alternate between voice and music every few ms. We model the audio behavior as a Markov model, assuming the probability of transitioning from voice to music (and vice versa) in a given 10ms period is .00005. That works out to a transition once every 200 seconds on average.

A temporal statistical modeler, based on the Markov model, weights and integrates the neural network's instantaneous probability output over time. Finally the encoder compares the integrated probability against a switching threshold that depends on bitrate, bandwidth, frame size, and application to determine the coding mode.

|

Realtime automatic mode switching

|

||||

|

||||

| download these samples: | [ | speech / music test sample (original) | | | ...45kbps Opus | ] |

When operating with a low-latency constraint, Opus has to make decisions without any look-ahead. This makes the decision process harder than it would otherwise be, because we can't look into the future to confirm the correctness of a decision. Fortunately, mode decision errors in Opus tend to be rather 'soft' due to the codec's continuous operating range; incorrect speech-vs.-music decisions nearly always happen where either mode will do a reasonable job, as the demo above shows.

Since the 1.1 alpha, libopus can optionally use lookahead in those applications where ultra-low latency isn't important. This delayed decision mode can, as mentioned above, look forward into buffered audio to make mode selection decisions based not only on past speech-vs.-music probability, but also future speech-vs.-music probability. As such, it is able to make higher-confidence mode decisions slightly sooner. Note that this feature is not yet implemented in opusenc, so no fancy demo... yet.

The 1.1 release contains several other improvements. They're less bloggogenic, but there's no reason to neglect mentioning them entirely.

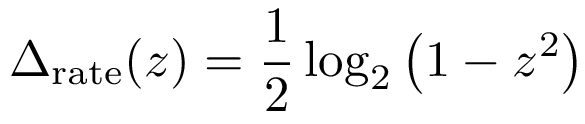

Mid/side stereo encoding is an easy way to improve compression in the most common case, when the left and right (or any two) channels are similar. When the left and right channels are completely different or only slightly correlated, then mid-side coding provides little improvement. When the left and right channels are highly correlated — nearly identical or actually identical — then the efficiency increase approaches a factor of two. Obviously, quantifying exactly how much savings we can extract from coupling is useful to informing VBR rate allocation.

The relation turns out to be simple. For a left-right correlation z, we have:

Delta represents the optimal change in quantization resolution in bits/sample. We cap the actual allocation decrease to a maximum that depends to the number of frequency bins actually coded in mid/side stereo; see /* Stereo Savings */ in celt/celt_encoder.c for the details.

Opus does not explicitly transmit quantizers for each band, nor does it have an equivalent of MP3's scalefactors or Vorbis's floor curve. Instead, Opus transmits the energy of each band, then determines quantization for each band using a fixed table-driven algorithm. The number of bits saved by not encoding scalefactors or a floor almost always outweighs the inflexibility of the fixed allocation by a substantial margin.

That said, there are cases where the fixed allocation is suboptimal, and the Opus bitstream allows the encoder to signal modifications to the fixed allocation, dynamically granting more bits to specific bands that need it. Libopus 1.1 implements dynamic allocation for the following two cases:

In both cases, dynamic allocation grants more bits to the specific affected bands. This reduces stray spectral leakage by increasing the precision of the coded representation.

The 1.1 encoder now uses a built-in DC rejection filter (3Hz cutoff) for all modes. The effect of the filter itself is inaudible, but it prevents DC energy from polluting the masking and energy analysis of the lowest frequency bands.

Previous Opus encoders offered only CBR and ABR with a short time window. As of 1.1, the encoder implements true unconstrained VBR. When using VBR, the bitrate option selects a VBR mode that hits the approximate requested bitrate for most samples (exactly the same as in Vorbis). The encoder has the freedom to use more or fewer bits at any time as it sees fit in order to maintain consistent quality.

1.1 also adds temporal VBR, an accidental discovery from a bug in an earlier pre-release. Temporal VBR is new heuristic that adds bits to loud frames and steals them from quiet frames. This runs counter to classic psychoacoustics; critical band energies matter, not the broadband frame energy. In addition, TVBR does not appear to be exploiting temporal postecho effects.

Nevertheless, listening tests show a substantial advantage on a number of samples. I'll be the first to admit we haven't completely determined why, but my best theory is that strong, early reverberant reflections are better coded by the temporary allocation boost, stabilizing the stereo image after transients especially at low bitrates.

Going into detailed analysis of 1.1's speed improvements could fill an entire demo, but it's worth mentioning it's not just a little bit faster. For example, 64kbit/sec stereo decode on ARM processors is currently 74% faster (42% less time) and encode is 27% faster (21% less time).

And we haven't even started using NEON yet.