Vorbis has always been able to encode surround sound. From the beginning the specification has allowed 255 channels and the encoding/playback libraries [and tools] support an arbitrary number of channels. All of the reference Vorbis software has also supported arbitrary 'couplings', which allow multiple channels to be inter-encoded to reduce encoding redundancy. That said, quite a bit of optimization remains to be done in the encoder for efficient surround encoding as the reference Vorbis encoder has used channel couplings only on stereo samples. Samples with more than two channels are encoded with all channels discrete.

One reason to tackle surround coupling implementation in Vorbis now is to inform resumed development of the Ghost codec. Several possibilities for surround implementation in Ghost are on the table (including the possibility of not coupling channels at all and using Ambisonics as Ghost's native internal 'coupled' representation).

Although the various layers of Xiph tools and libraries have always passed through arbitrary numbers of decoded audio channels, none of the layers has specified official behavior as to channel location/ordering until very recently. Vorbis itself has just added official 6.1/7.1 channel ordering to the spec (following the same ordering pattern as it had up to 5.1, that is, following Dolby's AC3 ordering).

When Vorbis was new, no mainstream platform offered an official specification with respect to ordering surround channels. Today, all major OS platforms, including Linux, at least try to address channel ordering in a consistent fashion. Vorbis officially specifies channel orderings up to 7.1 surround, and vorbis-tools (upcoming 1.4.0 release) and libao (upcoming 1.0.0 release) have also been updated to specify channel ordering behavior. This is all toward making sure that, for example, the left rear speaker always plays the left rear channel and it doesn't randomly come out the center speaker instead.

The vorbis-tools and libao work for channel ordering is complete in SVN, but a little more work is still needed on both before release (primarily testing and addressing the remaining bug queue). Vorbis-tools utilities now automatically map channels between file formats. Libao adds an optional 'matrix' field to the ao_sample_format struct to declare the channel ordering in streams submitted for playback. Libao has also been updated to make proper use of channel mapping mechanisms in all drivers for Linux, *BSD, Solaris, Windows and MacOSX (pending a few commits).

It is worth noticing on Linux that not only will order vary depending on playback backend (Pulse, ALSA, OSS) but it will probably also be different for different applications, or even for the same application playing different samples (eg, the orderings may be correct for the WAV but scrambled for the Ogg in, eg, some mplayers). In general, Linux application and backend developers are now keenly aware of and handling this problem in modern releases, but it will take a little while for this new awareness to replace an awful lot of old software. There's also a ton of broken channel/mixer mappings in various ALSA drivers to be found and fixed.

Vorbis supports arbitrary channel 'couplings', which allow multiple channels to be inter-encoded to reduce encoding redundancy and further eliminate inaudible or unimportant imaging information. The coupling mechanism is described in "Ogg Vorbis stereo-specific channel coupling discussion". As the title suggests, this doc only addresses the practical considerations of the single-depth stereo coupling case. To date that's the only case the encoder implements.

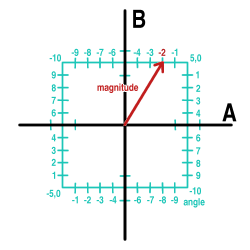

The Vorbis 'greater than stereo' coupling mechanism is conceptually simple. Looking at coupling from the encoder side, pairs of channel residue vectors (two 'magnitudes') are coupled together in-place into two new vectors (a 'magnitude' and an 'angle') using square polar mapping. In the stereo case, this is done once with the Left and Right channels. The results are then encoded.

An example of square-polar mapping in Vorbis channel coupling from "Ogg Vorbis stereo-specific channel coupling discussion". In this example, the 'A' vector element value is 3 and the 'B' value is 5. Square polar mapping results in a 'magnitude' value of 5 and an 'angle' value of 2.

The intent of square polar representation is to convert a pair of correlated magnitude vectors into a single high-resolution 'magnitude' vector and less critical variable resolution 'angle' vector without requiring trigonometry.
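A minimal sketch of a square polar mapping on single integer values, to show that both directions need only comparisons, additions and subtractions. The function names are hypothetical, and the sign convention for the angle is an assumption of this sketch (the sign records which of the two inputs was larger; the size of the angle is the difference between the two values). The inverse follows the shape of the inverse coupling step in the Vorbis I specification.

```python
def couple(a, b):
    """Map an (A, B) pair of integers to (magnitude, angle)."""
    if abs(a) >= abs(b):
        # A is at least as large: it becomes the magnitude, angle >= 0.
        return a, (a - b if a > 0 else b - a)
    # B is strictly larger: it becomes the magnitude, angle < 0.
    return b, (a - b if b > 0 else b - a)


def uncouple(magnitude, angle):
    """Recover (A, B) from (magnitude, angle); no trigonometry needed."""
    if magnitude > 0:
        if angle > 0:
            return magnitude, magnitude - angle
        return magnitude + angle, magnitude
    if angle > 0:
        return magnitude, magnitude + angle
    return magnitude - angle, magnitude


# Every integer pair in a small range survives the round trip unchanged.
for a in range(-8, 9):
    for b in range(-8, 9):
        assert uncouple(*couple(a, b)) == (a, b)

print(couple(3, 5))  # magnitude 5, angle of size 2
```

The magnitude always carries the larger of the two values at full resolution, which is what lets the angle be treated as the less critical of the two vectors.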

Stereo is the simplest coupling case (beyond no coupling at all); a pair of vectors (Left and Right) are coupled together in-place into two new vectors Magnitude and Angle. Because the floor vectors usually differ, often substantially, magnitude/angle is not equivalent to mid/side.

In the stereo case, coupling is performed once with the Left and Right channels, converting them to 'Magnitude' and 'Angle'. It's not hard to see that in the case of more than two channels (let's assume four channels, ie, quadraphonics), coupling can be performed separately on the front and back stereo pairs. Or, instead of coupling front left to front right and back left to back right, front and back left could be coupled and front and back right coupled. Either of these cases results in two magnitude and two angle vectors.

In a stereo sample, coupling is performed once to tie together the left and right channels. It is easy to see that in quadraphonics the same technique can be applied twice, treating combinations of the four channels as two stereo pairs in a depth=1 coupling.

Beyond that, we can also couple the two magnitude vectors that result from the depth=1 coupling into a depth=2 coupling. From here, it is easy to see the full generalization of the Vorbis channel coupling system to arbitrary combinations of vectors at any depth. Channels are successively coupled in-place after floor removal and before residue encoding. (Note that angle vectors can just as easily be coupled as well; it is up to experimentation to decide the modelling and practical usefulness of such an operation).
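The in-place, successive nature of the couplings can be sketched as an ordered list of (magnitude channel, angle channel) steps that the encoder applies front to back and the decoder undoes back to front. This is an illustrative sketch on toy integer vectors: the helper names, the sample values, and the angle sign convention are assumptions, and floor removal and residue encoding are left out entirely.

```python
def couple(a, b):
    """Square polar mapping of one integer pair to (magnitude, angle)."""
    if abs(a) >= abs(b):
        return a, (a - b if a > 0 else b - a)
    return b, (a - b if b > 0 else b - a)


def uncouple(m, ang):
    """Inverse of couple()."""
    if m > 0:
        return (m, m - ang) if ang > 0 else (m + ang, m)
    return (m, m + ang) if ang > 0 else (m - ang, m)


def apply_steps(vectors, steps):
    """Couple vectors pairwise, in order; each step overwrites its two inputs."""
    out = [list(v) for v in vectors]
    for mag_i, ang_i in steps:
        pairs = [couple(x, y) for x, y in zip(out[mag_i], out[ang_i])]
        out[mag_i] = [p[0] for p in pairs]
        out[ang_i] = [p[1] for p in pairs]
    return out


def undo_steps(vectors, steps):
    """Undo the coupling steps in reverse order."""
    out = [list(v) for v in vectors]
    for mag_i, ang_i in reversed(steps):
        pairs = [uncouple(m, a) for m, a in zip(out[mag_i], out[ang_i])]
        out[mag_i] = [p[0] for p in pairs]
        out[ang_i] = [p[1] for p in pairs]
    return out


# Toy quadraphonic residue vectors:
# 0 = front left, 1 = front right, 2 = back left, 3 = back right.
quad = [[3, -1, 0, 4], [5, -2, 0, 4], [2, 0, 1, -3], [2, 1, -1, -3]]

depth1 = [(0, 1), (2, 3)]   # two stereo pairs
depth2 = depth1 + [(0, 2)]  # then couple the two resulting magnitudes

coded = apply_steps(quad, depth2)
assert undo_steps(coded, depth2) == quad
```

Because each step only rewrites two vectors in place, adding a depth=2 step is just one more entry in the list; swapping `(0, 2)` for `(1, 3)` would couple the two angle vectors instead.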

Vectors resulting from a depth=1 coupling can themselves be subsequently coupled into a depth=2 coupling, and so forth. At left are some depth=2 possibilities from further coupling the depth=1 results above; the final vectors [with a gray background] are then coded to the stream. These are not the only possibilities as any arbitrary coupling arrangement is allowed; these examples are merely the most likely to be sensible.

After coupling (if any), individual residue vectors are bundled in groups called 'submaps'. Submaps allow different sets of vectors to be encoded with different parameters, and make it easy to reuse the same encoding parameters on multiple sets of similar vectors.

For example, sticking with our above quadraphonic example, the simplest way to 'optimize' quadraphonic encoding over simply encoding four discrete channels would be to encode the stream as two sets of stereo channels. The two stereo pairs would be grouped into two stereo submaps, and in this way the submaps could use codebooks constructed for stereo encoding directly. This strategy applies to 5.1 and 7.1 just as easily, where a mix of discrete channel submaps and stereo submaps could achieve substantial savings over a discrete-only encoding without requiring substantial additional mechanism or tuning over basic stereo streams.

The LFE channel ('Low Frequency Effect') is a discrete channel meant to separate high-amplitude bass effects out of the other channels in order to improve their available headroom. The LFE channel in most systems is lowpassed to 120 or 250Hz, though it may also be a full bandwidth channel. AC3 imposes a 120Hz lowpass; for Vorbis, I suggest 250Hz (which will already be smaller than a single residue partition, so there's no sense in setting it lower).

The LFE can be encoded in a submap of its own. Although Vorbis is relatively efficient at not wasting bits where there is no spectral content to encode, there are still 'overhead' bits to encoding a mostly-unused full bandwidth channel. By using a submap with a custom floor and only a single residue partition, the bitrate of an LFE channel can be dropped from ~15kbps (-q3, discrete, typical test input) to under 3kbps practically for free.

By way of illustrating submap usage, existing stereo coupling mechanisms in the Vorbis encoder can be pressed into service to provide some surround coupling optimization without code changes. Although this approach is 'cheating' in a sense and it's possible to do much better, it is actually roughly comparable to channel matrixing mechanisms in other codecs like AC3, where stereo surround channels are multiplexed into a bitstream 'slot' originally allocated to a single discrete channel.

The image at left illustrates, from top to bottom: mono streams, which obviously do not use any coupling; stereo streams, which use a single coupling; quadraphonic streams, which could be implemented naively as two stereo couplings; and 5.1 streams, which could be implemented as a single 'center' channel, two stereo couplings in separate submap bundles, and a mono bandwidth-limited LFE submap. (7.1 could be handled similarly as three stereo submaps, one center (mono) submap and an LFE submap.) Although simple, this would still realize substantial bitrate savings.
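The naive 5.1 arrangement can be written down as two small tables: a list of coupling steps and a per-channel submap assignment. This is only a sketch of the layout described above, not the encoder's actual setup tables; the submap numbering and role labels are hypothetical, and it assumes the Vorbis 5.1 channel order of front left, center, front right, rear left, rear right, LFE.

```python
# Vorbis channel order for 5.1 streams.
CHANNELS = ["front left", "center", "front right",
            "rear left", "rear right", "LFE"]

# Depth=1 coupling steps as (magnitude channel, angle channel) index pairs.
COUPLING_STEPS = [(0, 2), (3, 4)]

# Submap index for each channel (hypothetical numbering for this sketch).
CHANNEL_TO_SUBMAP = [0, 1, 0, 2, 2, 3]

SUBMAP_ROLES = {
    0: "front stereo pair",
    1: "center (mono)",
    2: "rear stereo pair",
    3: "LFE (band-limited mono)",
}


def bundle_channels(channel_to_submap):
    """Group channel indices by the submap they are assigned to."""
    bundles = {}
    for channel, submap in enumerate(channel_to_submap):
        bundles.setdefault(submap, []).append(channel)
    return bundles


bundles = bundle_channels(CHANNEL_TO_SUBMAP)
for submap, members in sorted(bundles.items()):
    names = ", ".join(CHANNELS[c] for c in members)
    print(f"submap {submap} ({SUBMAP_ROLES[submap]}): {names}")
```

Each coupled pair stays inside one submap here, so a submap can reuse parameters tuned for plain stereo, while the center and LFE submaps are free to use their own.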

The only practical reason to consider these simple couplings is for experimentation and to avoid the analysis complexities caused by coupling depth>1. More on this below.

A few samples illustrating the simple 'stereo abuse' technique in action. Don't look too hard at the internal structure of these Oggs for correctness; they're quick-and-dirty tossed together setups to illustrate the point. Note that the originals of both clips have a number of oddities in their surround mixes.

HA HA! DISREGARD THAT... Unfortunately I found a corruption bug last night at about 3am and decided to pull down the samples as I was sure at least one of them had been hit and the quality suffered because of it. It's just a bug, I'll have them back up soon. 'Quick and dirty' just like I said.

'Tron' closing credits music clip (5.1/48kHz encoded at q3)

'Sita Sings the Blues' music/SFX clip (5.1/48kHz encoded at q3)

Angle vectors resulting from coupling steps do not lose any information and are not lower resolution than the magnitude vectors (in fact, they're the same resolution and double the dynamic range). However, angle resolution is not as perceptually important as magnitude resolution. Depending on frequency band and signal magnitude, we can lose a significant amount of angle resolution inaudibly. We can lose quite a bit more than that with audible but benign results. The coupling system was designed with this in mind.

The original Vorbis coupling documentation refers to 'point' and 'phase' stereo, describing the state of the early beta encoders. The modern Vorbis encoder mixes three coupling types that do not directly map onto the behavior of the beta encoders. The first two are elliptical and dipole stereo, both variants of preserving no phase information whatsoever, and the third is lossless channel coupling. These three represent the extreme possibilities of coupling, where either no angle information is preserved, or all of it is preserved as in the uncoupled state (but hopefully further decorrelated and compacted).

Elliptical (also called 'point' stereo within the source) is a coupling that discards the angle vector entirely. It is used only for very low-energy spectral lines that represent noise. The energy threshold governing its use is adjusted within the encoder according to frequency and selected encoding quality. Elliptical stereo is used more readily at frequencies where the ear is relatively insensitive to phase/location.

The 'elliptical' aspect of point stereo has to do with the fact that the floor vectors (and thus narrowband baseline energies) of two bands are seldom equal. Because a quantized unit value in each residue vector does not represent equal energy, a magnitude vector of equal energy sweeps an ellipse rather than a circle.

Magnitude vector energy in elliptical stereo must not directly use the naive square polar mapping result, lest the coupling step always add energy. The fact that a square is square and not round is the first hint that changing the angle of the magnitude vector also changes its energy, but it is perhaps easiest to see by way of example.

If A has a value of 0 and B has a value of 5, the lossless coupling result would be magnitude=5, angle=-5. However, if the angle is discarded, we see that upon uncoupling, both A and B will be set to 5, a net increase of energy. If the floors are equal, the energy will increase to 7.071. Therefore, if quantization is to be done prior to coupling, magnitudes must either be adjusted prior to quantization such that the average quantization error yields no net energy gain, or the energy gain must be tracked and explicitly normalized, eg, as part of noise normalization below.
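The arithmetic behind that example, as a short sketch. It assumes equal floors (so a unit in A and a unit in B carry the same energy) and measures 'energy' as the Euclidean length of the (A, B) pair, which is where the 7.071 figure comes from. The rescaling at the end is just one conceivable compensation, shown for illustration; it is not taken from the encoder.

```python
import math


def level(a, b):
    """Combined level of an (A, B) pair, assuming equal floors."""
    return math.hypot(a, b)


a, b = 0, 5

# Square polar magnitude is the larger of the two values.
magnitude = 5

# With the angle discarded, the decoder sets both channels to the magnitude.
decoded = (magnitude, magnitude)

original_level = level(a, b)      # 5.0
decoded_level = level(*decoded)   # 5 * sqrt(2), about 7.071

# One possible compensation: scale the magnitude down before coding so
# that the decoded pair has the same level as the original pair.
compensated = original_level / math.sqrt(2)
restored_level = level(compensated, compensated)

print(original_level, decoded_level, restored_level)
```

The gain depends on the discarded angle: it is largest when one of the two inputs is zero, as here, and vanishes when A and B are already equal.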

The other option (the option used by the Vorbis encoder) is to simply quantize after point coupling. However, this has its own disadvantages, as it results in the encoder quantizing before lossless stereo coupling, but after point stereo coupling. This causes trouble when implementing noise normalization, a process that is commingled with quantization.

Dipole stereo is a variant of elliptical stereo where out-of-phase signals are allowed to cancel and in-phase signals are allowed to reinforce. This is used primarily to replace elliptical stereo at lower frequencies. Why? Primarily because it ends up sounding better in testing. I don't have a theoretical justification for the effect.

As with elliptical stereo, the potential energy gain resulting from discarding the angle vector of square polar mapping must be accounted for. (Square polar mapping does not in any way simplify the encoder; if anything it's something of a complication. It does, however, simplify the decoder.)

Lossless stereo is exactly what it sounds like; quantized values are coupled losslessly into magnitude/angle vector pairs from which the original uncoupled quantized values are recovered exactly in the decoder. Quantizing after lossless coupling results in greater overall error than quantizing before coupling, so quantization should precede lossless coupling. The fact that lossless coupling should ideally follow quantization, but lossy coupling best precedes it, is the source of a number of practical headaches, especially considering that noise normalization must commingle with the quantization process. Reconciling fragmented quantization and normalization that is performed in multiple steps, mixed throughout the spectrum, is the primary complication to depth>1 coupling in the current Vorbis encoder.

Vorbis's singular greatest achievement in the art of audio encoding was demonstrating conclusively that maintaining narrow- and mid-band energy is far more important to perceived sound quality than minimizing global error. To that end, Vorbis uses 'noise normalization' to inspect and adjust post-quantization narrowband energy level. In bands where quantization has severely affected total energy, residue values are promoted or demoted to adjust the narrowband energy level. This is of greatest importance when low-bitrate quantization could otherwise cause large stretches of the spectrum to collapse to zero energy.
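A toy illustration of the idea, not the encoder's actual algorithm: the function name, the promotion rule, and the half-unit threshold are all assumptions made for this sketch, and it only promotes values (it never demotes). It quantizes one narrow band by rounding, then promotes the strongest values that rounded to zero until the energy they carried is roughly accounted for.

```python
def quantize_with_normalization(band):
    """Round a band to integers, then restore collapsed energy by promotion."""
    quantized = [int(round(x)) for x in band]

    # Energy carried by values that rounding collapsed to zero.
    lost = sum(x * x for x, q in zip(band, quantized) if q == 0)

    # Candidates for promotion, strongest first.
    candidates = sorted(
        (i for i, q in enumerate(quantized) if q == 0 and band[i] != 0.0),
        key=lambda i: abs(band[i]),
        reverse=True,
    )

    for i in candidates:
        if lost < 0.5:  # less than half a unit of energy left to restore
            break
        quantized[i] = 1 if band[i] > 0 else -1  # promote to a unit value
        lost -= 1.0

    return quantized


# A low-level band in which every value rounds to zero on its own.
band = [0.4, -0.48, 0.3, 0.4, -0.35, 0.45, 0.2, -0.4]

plain = [int(round(x)) for x in band]
normalized = quantize_with_normalization(band)

print(plain)
print(normalized)
```

Plain rounding leaves this band with zero energy, while the normalized version keeps a single unit value where the strongest input was. That raises global error slightly but keeps the band from collapsing, which is the trade described above.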

Noise normalization interacts with quantization, and quantization is handled differently in the elliptical and lossless stereo cases. Because elliptical stereo quantizes after coupling, noise normalization must follow elliptical coupling. However, lossless coupling must quantize, then couple, which implies that noise normalization must be performed before coupling. The original Vorbis encoder's stereo encoding modes implement a working compromise; however, the compromise used in stereo to this point is not workable at coupling depths > 1.

Beyond quantization timing issues, there are also a number of unanswered questions with regards to tuning noise normalization behavior. Anyone who has studied the Vorbis source has noticed that at present, it is primarily used as an energy promotion system rather than properly adjusting energy both up and down as needed. Also, it is disabled in the presence of any strong signal content for a number of reasons. In short, there are numerous disconnects between the theoretical model of noise normalization and the practical implementation.

The first cut of surround support does not attempt to address these questions; it looks to extend to coupling depths greater than one behavior equivalent to the current noise normalization at depth one. After the first cut is realized, it is worth revisiting new insights into more adequately modelling noise normalization.

It is obvious and already demonstrated that additional stereo-coupled pairs within a surround bitstream yield significant bitrate savings with little downside. But which stereo coupling choices are best for depth=1? And beyond depth=1, which further couplings are likely to yield the best results?

The goal of the next two weeks (after sorting out the logistical depth>1 quantization/normalization headaches in the encoder) is to establish via listening test first the optimal depth one couplings, and then the optimal couplings at greater depth (if any).

Monty's Vorbis surround coupling work is sponsored by Red Hat Emerging Technologies.
